Once engineers found a way to virtualize graphics processing units (GPUs), a new era began for machine learning, gaming, modeling, and any other compute-hungry workload: all these applications can now move to the cloud! In this article, I’d like to take a closer look at why GPU virtualization is so promising, who is pioneering this tech, and how VMware managed to close the gap between virtual and bare-metal GPUs.

A quick recap on GPU virtualization

Sharing GPUs makes it possible to respond flexibly to users’ demand for this type of hardware without sacrificing application performance or the overall cost efficiency of the IT environment. Imagine a research group doing machine learning with a high-performance computing (HPC) cluster at its disposal. Scientists run training workloads mostly during the research and development phases. Nobody runs intense machine learning/deep learning workloads around the clock; scientists have plenty of other things to do, like answering emails, cleaning data, and developing new ML algorithms. As a result, each user’s demand for GPUs is highly irregular: sometimes users fight for GPU resources, and sometimes the hardware sits idle. That’s why sharing graphics devices among users is a good idea when a farm of dedicated GPUs would be overkill.
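To put a rough number on that irregular demand, here is a minimal sketch. It assumes (purely for illustration) that every researcher needs a GPU independently of the others and for the same fraction of the time; the function name, the 10 researchers, and the 20% busy fraction are all made up, not figures from VMware.

```python
from math import comb


def prob_demand_exceeds(users: int, busy_fraction: float, gpus: int) -> float:
    """Probability that more than `gpus` users want a GPU at the same moment.

    Illustrative model only: each user is assumed to be busy independently
    with probability `busy_fraction` (a simple binomial model).
    """
    if not 0.0 <= busy_fraction <= 1.0:
        raise ValueError("busy_fraction must be between 0 and 1")
    return sum(
        comb(users, k) * busy_fraction**k * (1 - busy_fraction) ** (users - k)
        for k in range(gpus + 1, users + 1)
    )


# Hypothetical team: 10 researchers, each training about 20% of the time.
for gpus in (2, 4, 6, 10):
    shortage = prob_demand_exceeds(users=10, busy_fraction=0.2, gpus=gpus)
    print(f"{gpus} shared GPUs: someone has to wait {shortage:.1%} of the time")
```

Under these made-up assumptions, four shared GPUs run short only about 3% of the time, which is the intuition behind sharing instead of buying one device per person. Real demand is rarely independent (deadlines cluster), so treat this as a lower bound on contention.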

What VMware changed

A few additions to its own tech, plus a couple of third-party technologies, allowed VMware to bring the performance overhead of virtualization down to an impressively low level.

Blast Extreme

First, VMware added support for Blast Extreme, a remote display protocol designed to deliver graphics-intensive virtual workloads, to VMware Horizon. The protocol is billed as offering the lowest CPU and power consumption, and it works over both TCP and UDP.

Tweaking ESXi configuration

Second, VMware turned off vMotion and enabled DirectPath I/O to bring virtual GPU performance as close as possible to bare metal.

Why turn off vMotion? vMotion moves running VMs between hosts to balance load across the environment and avoid application downtime. Wherever a VM lands, it gets the RAM and CPU resources it needs. For compute-intensive work, though, avoiding overhead is vital, so vMotion has to be turned off. By disabling this feature, VMware effectively pinned each VM to a specific GPU. That way, no performance is lost to data transfers during migration, which means more efficient utilization of GPUs.

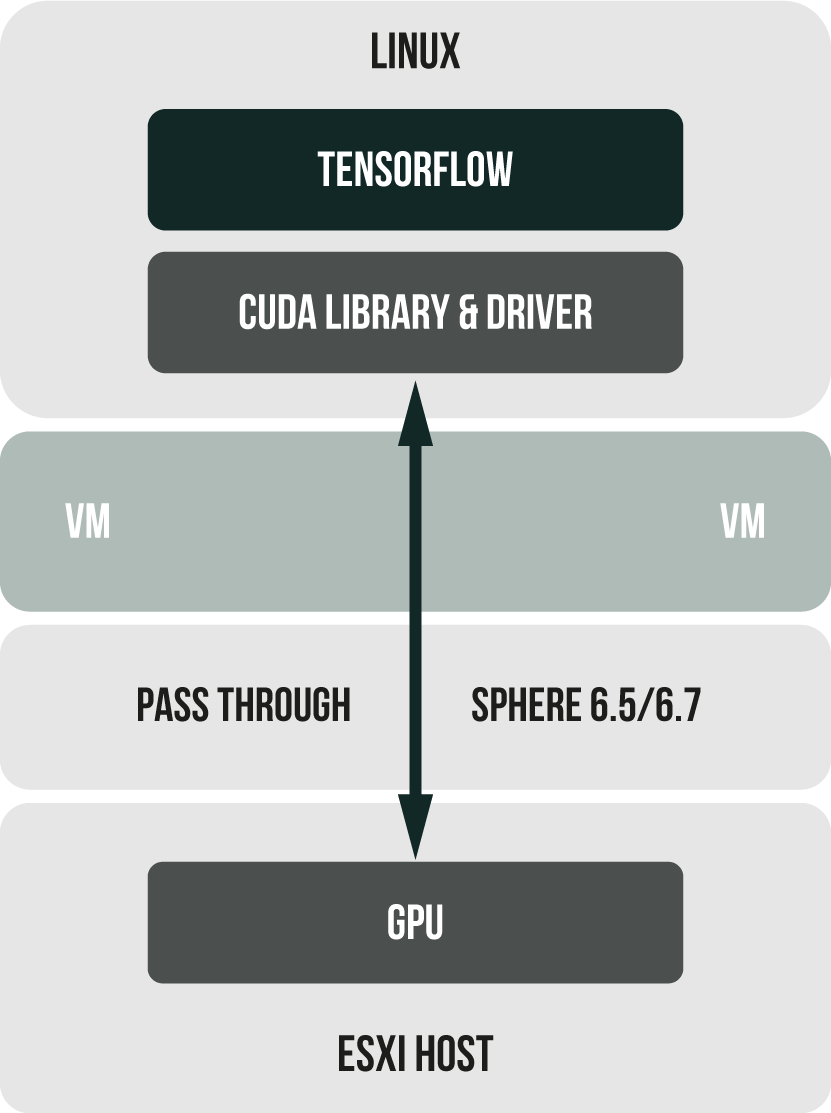

Turning on DirectPath I/O gives a VM direct access to a physical GPU: the CUDA driver in the guest talks to the device without going through the hypervisor. This approach ties one VM to one specific GPU. Here’s a quick drawing of how DirectPath I/O works.

FlexDirect

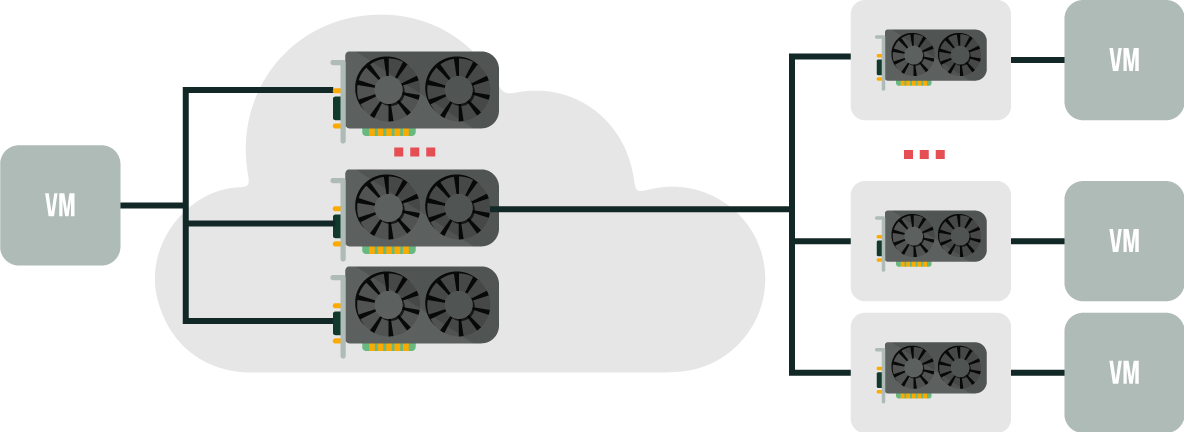

Third, VMware adopted Bitfusion’s FlexDirect, a technology that enables the hypervisor to attach and manage multiple GPUs. VMware knew that DirectPath I/O works great only when a single VM talks to a dedicated device. If something GPU-hungry running in that VM needs more power, FlexDirect clusters several GPUs together for it.

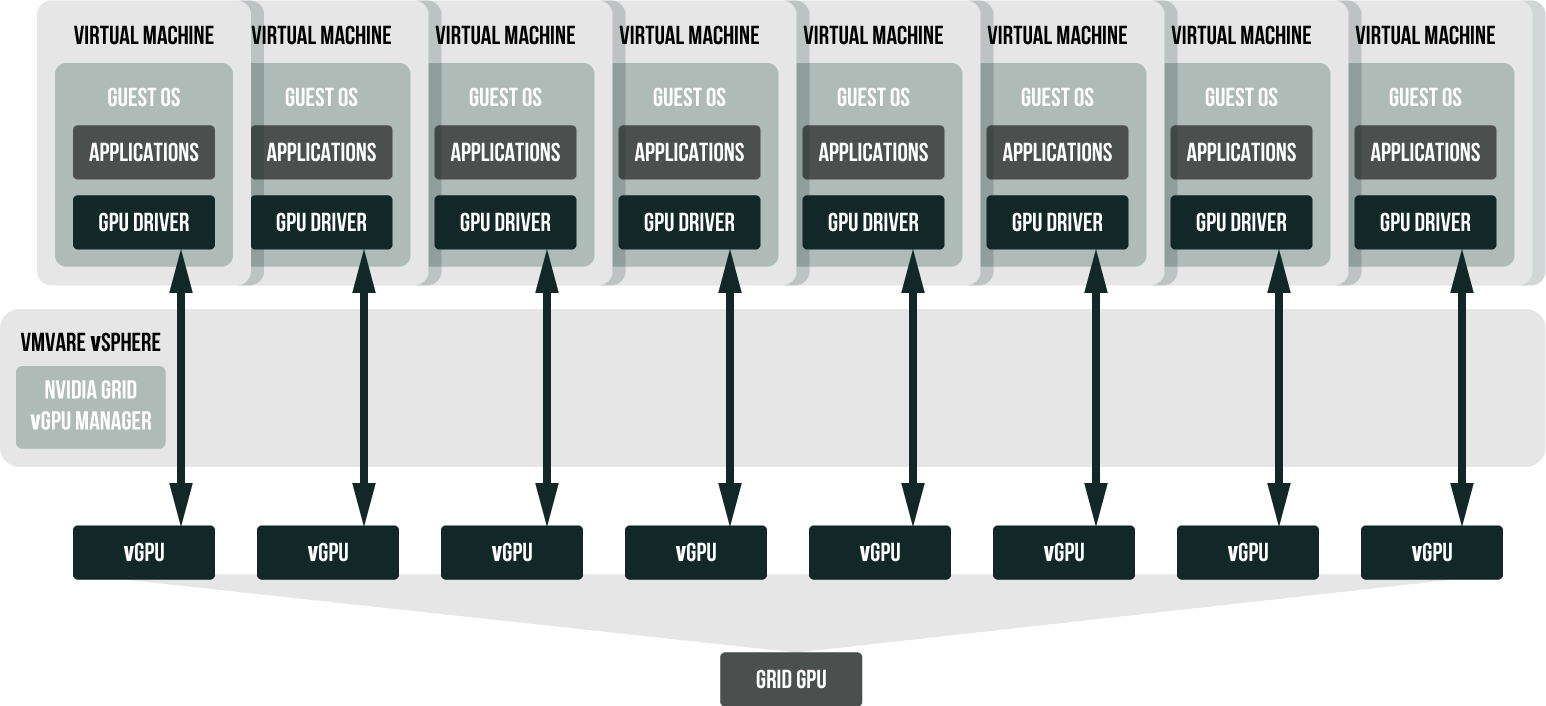

Nvidia GRID

Fourth, vSphere supports Nvidia GRID, which suits multiple types of workloads. GRID lets you carve up a GPU into multiple virtual devices so that each user gets their own resource. Previously, this technology supported GPU virtualization only for graphics workloads. Since the introduction of Pascal GPUs, Nvidia GRID has supported GPU virtualization not only for graphics but also for things like machine learning/deep learning based on TensorFlow, Keras, etc. Keep in mind that only the GPU’s local memory can be shared, not the computational cycles.

Did VMware succeed in GPU virtualization?

GPU virtualization worked out great for VMware.

Training a TensorFlow-based language model resulted in a 3-4% performance hit compared to a bare-metal implementation.

VMware also ran several performance tests after marrying its hypervisor with Singularity, a container tech for HPC workloads. In other words, they combined containers’ ability to make applications portable with VMs’ ability to operate in a shared environment. It sounds like an oxymoron, but VMware decided to try it. They embedded a single container per VM to prove that a GPU can be shared in such an environment. VMware divided the test GPU into four pieces and ran a vSphere-Singularity VM on each. For a 17% performance trade-off, the GPU showed three times higher throughput once shared.
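Here is one back-of-the-envelope way to read those last two numbers. The model below is my own assumption, not VMware’s published methodology: all four VMs run the same job at the same time, and each job takes 17% longer than it would with the whole GPU to itself. The function name is hypothetical.

```python
def shared_throughput_gain(vms: int, slowdown: float) -> float:
    """Throughput of `vms` VMs sharing one GPU, relative to running the
    same jobs one after another on the unshared GPU.

    Assumed model (illustrative only): every VM runs an identical job
    concurrently, and each job takes (1 + slowdown) times as long as it
    would if it had the GPU to itself.
    """
    if vms < 1 or slowdown < 0:
        raise ValueError("vms must be >= 1 and slowdown must be >= 0")
    return vms / (1.0 + slowdown)


# Four VMs, each 17% slower than a job running alone on the GPU.
gain = shared_throughput_gain(vms=4, slowdown=0.17)
print(f"Throughput relative to an unshared GPU: {gain:.2f}x")
```

Under this reading, the gain comes out a little above 3x, in the same ballpark as the reported figure. The model only makes sense when a single job cannot keep the whole GPU busy on its own; otherwise sharing would slow each job down far more than 17%.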

VMware is not alone. Who else is developing GPU virtualization?

AMD and Intel.

AMD’s bet is MxGPU, which is based on SR-IOV. SR-IOV is a PCI Express standard that lets one physical device present itself as several virtual ones; it is best known from network adapters, and AMD has adapted it for GPU virtualization. The company reported that it managed to distribute GPU power equally among 16 users.

Intel is developing a solution based on Citrix XenServer 7. Intel’s tech merges a VM and GPU driver. By virtualizing the GPU that way, Intel expects to let hundreds of users access a GPU at the same time.

What’s coming?

Until recently, hardly anyone virtualized rendering, modeling, simulations, or machine learning tasks. VMware is breaking through that barrier by making its products fit for these purposes.

By 2022, this tech is expected to bring manufacturers of HPC solutions $45 billion; that’s 29% higher than forecasts made two years ago. Solutions that make GPU virtualization even more efficient may also appear.

Now, let’s get back to the hardware for a moment. Engineers are trying to put graphics processors and CPUs on one chip to get the best of both worlds. This approach should give data processing a big boost and ensure smart utilization of data center resources. It saves space, after all!